How to Improve Crowdsourced Labels for Dialogue Systems

Authors:

(1) Clemencia Siro, University of Amsterdam, Amsterdam, The Netherlands;

(2) Mohammad Aliannejadi, University of Amsterdam, Amsterdam, The Netherlands;

(3) Maarten de Rijke, University of Amsterdam, Amsterdam, The Netherlands.

Table of Links

2 Methodology and 2.1 Experimental data and tasks

2.2 Automatic generation of diverse dialogue contexts

3 Results and Analysis and 3.1 Data statistics

3.2 RQ1: Effect of varying amount of dialogue context

3.3 RQ2: Effect of automatically generated dialogue context

6 Conclusion, Limitations, and Ethical Considerations

7 Acknowledgements and References

A Appendix

In this section we provide supplementary materials that support our main paper: the experimental conditions (Section A.1); the quality control measures undertaken to ensure high-quality crowdsourced labels and generated supplementary context (Section A.2); and the prompts used to generate the supplementary context (Section A.3). Section A.4 includes the annotation instructions and screen dumps of our annotation task, and Section A.5 shows sample supplementary context generated by GPT-4.

A.1 Experimental conditions

We list the experimental conditions used in our crowdsourcing experiments in Table 3.

A.2 Data quality control

Generated user information need and summary. To address the potential hallucination of LLMs (Chang et al., 2023), we implemented a quality control process for the generated user information needs and summaries to ensure their coherence and factual accuracy. We automatically cross-reference the movies mentioned in each input dialogue with those mentioned in its summary. A summary is considered valid only if it contains at least two-thirds of the movies mentioned in the input dialogue; otherwise, it is discarded and a new one is generated following the same prompt requirements. In total, we discarded and regenerated 15 dialogue summaries. To further check coherence, we randomly sampled 30% of the generated summaries and information needs, and the authors reviewed them to confirm that they were coherent and aligned with the information presented in the input dialogue. This process improved the quality and reliability of the generated content.
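The coverage check and regeneration loop described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the list of movies per dialogue is assumed to be available (e.g., from dataset annotations), a movie counts as "mentioned" if its title appears as a case-insensitive substring of the summary, `generate` is a hypothetical stand-in for the GPT-4 call with the summary prompt, and `max_attempts`, `seed`, and the helper names are illustrative choices not taken from the paper.

```python
from fractions import Fraction
import random
from typing import Callable, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def movie_coverage(dialogue_movies: Sequence[str], summary: str) -> Fraction:
    """Fraction of the distinct movies in the dialogue that the summary mentions.

    Assumption: a movie counts as mentioned if its title occurs in the summary
    as a case-insensitive substring.
    """
    movies = {m.strip().lower() for m in dialogue_movies if m.strip()}
    if not movies:
        return Fraction(1)  # nothing to cover, so the check passes trivially
    text = summary.lower()
    found = sum(1 for m in movies if m in text)
    return Fraction(found, len(movies))


def is_valid_summary(dialogue_movies: Sequence[str], summary: str,
                     min_coverage: Fraction = Fraction(2, 3)) -> bool:
    # Exact rational comparison avoids floating-point edge cases at 2/3.
    return movie_coverage(dialogue_movies, summary) >= min_coverage


def generate_valid_summary(dialogue: str, dialogue_movies: Sequence[str],
                           generate: Callable[[str], str],
                           max_attempts: int = 5) -> Tuple[str, int]:
    """Regenerate until a summary passes the coverage check.

    `generate` is a hypothetical stand-in for the LLM call; `max_attempts`
    is an assumed cap that the paper does not specify.
    Returns the accepted summary and the number of discarded attempts.
    """
    discarded = 0
    for _ in range(max_attempts):
        summary = generate(dialogue)
        if is_valid_summary(dialogue_movies, summary):
            return summary, discarded
        discarded += 1
    raise RuntimeError(f"no valid summary after {max_attempts} attempts")


def sample_for_review(items: Sequence[T], fraction: float = 0.3,
                      seed: int = 0) -> List[T]:
    """Draw a reproducible random subset (30% by default) for manual review."""
    k = min(len(items), round(len(items) * fraction))
    return random.Random(seed).sample(list(items), k)
```

Using `Fraction` keeps the two-thirds threshold exact, so a dialogue with three movies passes when exactly two of them appear in the summary. Substring matching is deliberately simple; a real pipeline might need entity linking to handle alternative titles or abbreviations.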

Crowdsourced labels. To ensure the high quality of the collected data, we incorporated attention-check questions into the HIT: annotators were required to specify the number of utterances in the dialogue they were evaluating and to identify the last movie mentioned in the system response being evaluated. We rejected 10% of the HITs and re-issued them to collect new labels. In total, we gathered 1,440 data samples from the crowdsourcing task, spanning six variations for relevance and usefulness. We used majority voting to establish the final relevance and usefulness label for each dialogue.
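The attention checks and label aggregation described above can be sketched roughly as below. This is an illustrative reconstruction, not the authors' pipeline: the `Submission` record, the `gold` mapping from dialogue ID to (number of utterances, last movie), and all function names are hypothetical. The paper does not say how ties were resolved, so this sketch assumes a tie yields no label (`None`).

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Dict, Hashable, Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class Submission:
    """One annotator's judgment plus their answers to the attention checks."""
    worker_id: str
    dialogue_id: str
    aspect: str                   # e.g. "relevance" or "usefulness"
    label: Hashable
    reported_num_utterances: int
    reported_last_movie: str


def passes_attention_check(sub: Submission, true_num_utterances: int,
                           true_last_movie: str) -> bool:
    # Both checks must be answered correctly; movie titles are compared
    # case-insensitively after trimming whitespace (an assumption).
    return (sub.reported_num_utterances == true_num_utterances
            and sub.reported_last_movie.strip().lower()
            == true_last_movie.strip().lower())


def majority_vote(labels: Iterable[Hashable]) -> Optional[Hashable]:
    """Most frequent label; None if there are no labels or the top count is tied."""
    ranked = Counter(labels).most_common()
    if not ranked:
        return None
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # tie: assumed to need more annotations
    return ranked[0][0]


def aggregate(submissions: Iterable[Submission],
              gold: Dict[str, Tuple[int, str]]):
    """Filter submissions by attention checks, then majority-vote per (dialogue, aspect).

    Returns the final labels and the rejected submissions (which would be
    re-issued to collect new labels).
    """
    votes: Dict[Tuple[str, str], List[Hashable]] = defaultdict(list)
    rejected: List[Submission] = []
    for sub in submissions:
        n_utterances, last_movie = gold[sub.dialogue_id]
        if passes_attention_check(sub, n_utterances, last_movie):
            votes[(sub.dialogue_id, sub.aspect)].append(sub.label)
        else:
            rejected.append(sub)
    final = {key: majority_vote(labels) for key, labels in votes.items()}
    return final, rejected
```

Filtering before voting means a careless annotator's label never influences the final judgment; only submissions that pass both attention checks are counted.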

A.3 Prompts

Table 4 shows the final prompts used to generate the user information need and the dialogue summary with GPT-4.

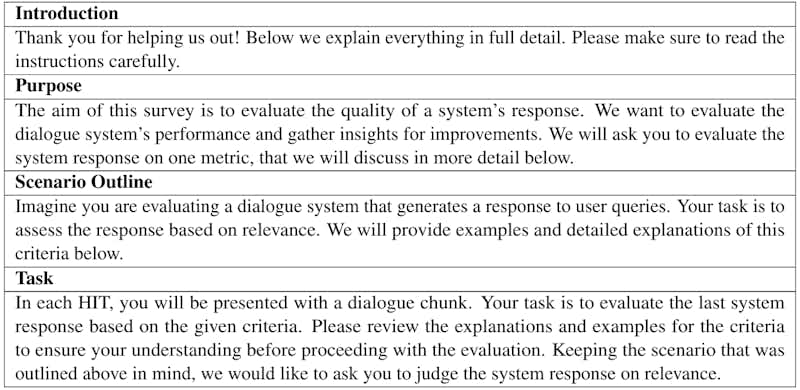

A.4 Annotation instructions and screen dumps

Table 5 details the annotation instructions for the relevance and usefulness evaluations. Figures 5 and 6 show the annotation interfaces used for Phase 1 and Phase 2, respectively.

A.5 Sample supplementary context

Table 6 shows an example user information need and dialogue summary generated by GPT-4.